If you were a tree

Virtual reality opens up a novel perspective in storytelling and engagement. It’s what seeded our VR film projects TreeSense and Tree.

By Xin Liu and Yedan Qian

In June of last year, we started a research project on understanding and developing body ownership illusion in virtual reality (VR). A couple of months later, our research grew into a VR narrative film we called TreeSense. Then, in October, we started collaborating with movie directors to push the project further and to develop a hyper-realistic VR film, called Tree.

All this work is based on our belief that virtual reality (VR) offers new ways of storytelling and engagement. Through systematic alteration of the human body’s sensory stimuli, such as vision, touch, motor control, and proprioception, our brains can be trained to inhabit different entities. Virtually experiencing a different state of being can lead to a heightening of empathy, of appreciation for others’ realities.

To showcase the power of this embodied storytelling method, we decided to create a sensory-enhanced VR film in which a person inhabits a tree, indeed becomes a tree, seeing and feeling their arms as branches and their body as the trunk. The audience members collectively share the experience of being a tree throughout its life cycle: from a seed rising through the dirt, to sprouting branches and growing to full size, until finally the tree is destroyed by fire. We called this project TreeSense.

TreeSense cultivates Tree

While we were developing TreeSense, we met VR film directors Milica Zec and Winslow Porter when they spoke to our class at the Media Lab. At the time, and by an odd coincidence, they were also developing a similar story about “being” a tree. It was a very exciting bit of serendipity, and we decided to work together in order to transform our ideas into the comprehensive VR film experience, Tree. In the Fluid Interfaces group, we took charge of the design and construction of the tactile experiences that are represented throughout Tree, while artist Jakob Steensen designed its stunning hyper-realistic visuals.

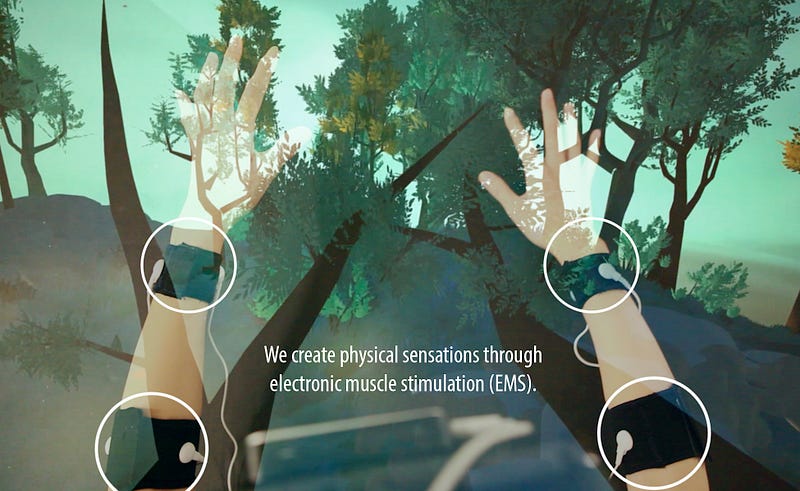

In both short films, TreeSense and Tree, each viewer wears a VR headset and becomes immersed in a virtual forest. But these projects differ in their tactile technologies. In TreeSense, we rely on electrical muscle stimulation (EMS) to provide visuotactile feedback. We’ve designed a series of EMS signals by varying combinations of pulse amplitude, pulse width, current frequency, and the electrodes’ location. The exciting part of EMS is that we’re able to explore uncommon body sensations, including feeling electricity underneath the skin, or fingers moving involuntarily. Audience members can actually sense their branches growing or getting poked by a bird. This implementation of EMS is definitely experimental.
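
As a rough illustration of how such a signal library could be organized, here is a minimal Python sketch. It is not our actual tooling: the class name, the units, the pattern names, and every numeric value are hypothetical placeholders chosen only to show the four parameters side by side, and none of them are recommended or safe stimulation settings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EmsSignal:
    """One EMS pattern described by the four parameters named in the text.

    Units are assumptions made for this sketch.
    """
    amplitude_ma: float    # pulse amplitude, in milliamps
    pulse_width_us: float  # pulse width, in microseconds
    frequency_hz: float    # pulse repetition frequency, in hertz
    electrode_site: str    # where the electrodes are placed on the body

    def __post_init__(self) -> None:
        # Reject physically meaningless combinations early.
        if min(self.amplitude_ma, self.pulse_width_us, self.frequency_hz) <= 0:
            raise ValueError("amplitude, pulse width, and frequency must be positive")
        if self.duty_cycle() > 1.0:
            raise ValueError("pulse width is longer than one period")

    def duty_cycle(self) -> float:
        """Fraction of each period during which current flows."""
        return self.pulse_width_us * self.frequency_hz / 1_000_000


# Hypothetical pattern library: names and values are placeholders only.
PATTERNS = {
    "branch_growth": EmsSignal(4.0, 150.0, 30.0, "upper arm"),
    "bird_poke": EmsSignal(6.0, 200.0, 50.0, "forearm"),
}
```

Keeping each sensation as a named, immutable record makes it straightforward to compare variations of one parameter while holding the other three fixed.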

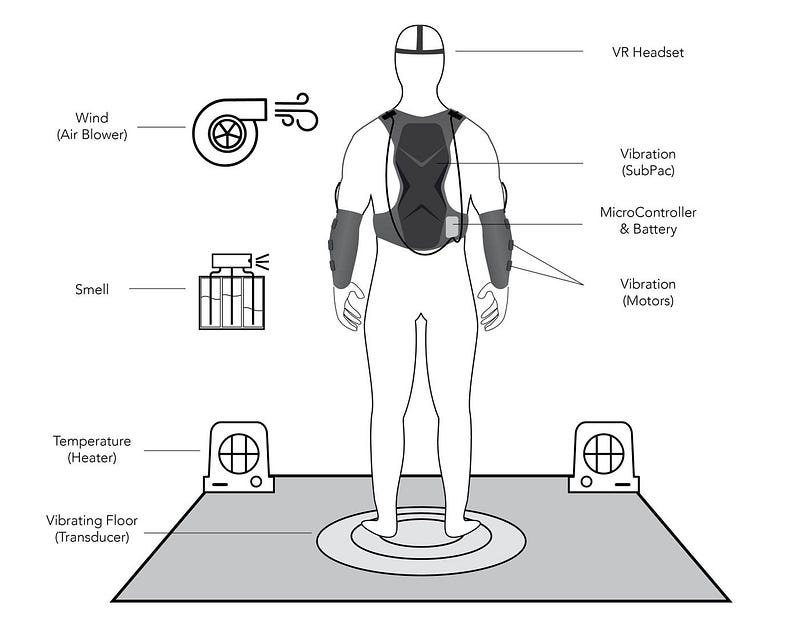

In Tree, we follow a more conventional and mature approach because the project has to work reliably for hundreds of people when it’s demonstrated at public events such as film festivals in theaters. Mostly we use commercial products, such as a Subpac for body vibration. We’ve also customized localized vibration points on the arm, and used subwoofers in constructing a floor that vibrates so users can actually feel the rumblings of a thunderstorm. Whenever there’s thunder, lightning, or fire in the VR, people also experience how those phenomena “touch” the tree. We’ve also made use of an air mover to simulate the wind, and added real-life heating in the space to simulate the fire that threatens the “tree person.”

We see the film, Tree, as a polished extension of our project, TreeSense. The VR movie was shown outside the academic environment and seen by hundreds of audience members at 2017 festivals, including Sundance, Tribeca, and TED: The Future You. At the Tribeca Film Festival, the immersive VR experience of Tree also included the use of scents. The audience literally smelled the dirt, the rain forest, and the fire.

So far, the presentations at film festivals and elsewhere have been very well received. We’ve seen audiences coming out of the VR film experience in tears. It’s been exhilarating for us to witness the power of body sensations in this new form of storytelling. People have told us they really felt like the tree and found its destruction to be terrifying and emotional. Meanwhile, TreeSense has just received an interaction prize in this year’s Core77 Design Awards and it will be shown at Dubai Design Week in the fall.

Challenges and expectations

Both our projects, Tree and TreeSense, experiment with new methodologies for immersive participatory storytelling by leveraging new technologies such as VR and tactile feedback mechanisms. We’re aiming to evoke believable bodily experiences through electrical muscle stimulation, vibration, temperature, and scents to unlock a higher level of realism. As an audience member said after feeling immersed in Tree, “You know it’s not real but your body really believes it!”

The design of multi-sensory experiences is a complicated process of composition and choreography. We constantly have to make sure the experiences are perfectly synced, both in terms of timing and intensity. There’s already high-fidelity visuals and audio inside the VR headset, but it’s not a trivial thing to add tactile elements that enhance the experience without distracting or disturbing the user.

In Tree, we have multitrack bass audio for each part of the body vibration, so that a person can feel the thunder, or the forest fire disturbance, or a bird landing on a branch. The whole tactile experience is digitally controlled by Max/MSP and Arduino software, which communicate with the Unreal Engine through the Open Sound Control (OSC) protocol. The tactile, olfactory, and temperature feedback in real life is precisely synced with the visual experience inside the Oculus headset. We went through various iterations to match the virtual visual details with the intensity, texture, and timing of the physical experiences.

We believe that TreeSense and Tree are tapping into more senses to help people connect with the narrative. We expect that the intimate, visceral, and emotional VR experience also has a real-world impact in that it helps users to develop a personal and immediate identification with the natural environment and the need to protect it.

In that sense, Tree is an example of a multisensory VR platform that has vast potential outside the research world. We’re convinced of the technology’s potential value for diverse purposes in many areas, such as telecommunication, active learning, and even medical applications. We are actively exploring these possibilities now.

Xin Liu is a research assistant in the Fluid Interfaces group at the MIT Media Lab. She graduates with a master’s degree in August. Yedan Qian is a visiting student in Fluid Interfaces.

Acknowledgments:

- Advisor: Pattie Maes, head of the Fluid Interfaces research group at the MIT Media Lab.

- Collaborators: VR filmmakers Milica Zec and Winslow Porter.

This post was originally published on the Media Lab website.
